This blog explains how to trigger Power BI dataset refreshes using an Azure Data Factory Web Activity.

Background:

The business requirement was a solution that triggers a Power BI dataset refresh from Azure Data Factory (ADF) once the ETL pipeline completes.

We were implementing an incremental data load process in which the ETL pipeline runs three times a day. After each ETL run, the Power BI dataset had to be refreshed so that the newly loaded data appeared in the Power BI (PBI) reports.

Solution:

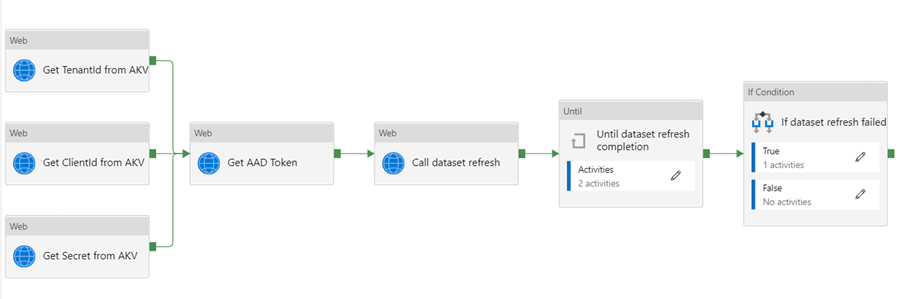

We created a pipeline in Azure Data Factory to automate the PBI dataset refresh after the ETL pipeline completes. The pipeline has 5 stages:

- Grab the secret keys from the Azure Key Vault.

- Call the Azure Active Directory (AAD) authentication service and get the AAD token that we need to call the Power BI REST API.

- Use the Power BI REST API to trigger the actual dataset refresh.

- Check the dataset refresh status.

- If the dataset refresh failed, get the error message.
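The stages after the Key Vault lookup can be sketched outside of ADF as plain HTTP requests. The Python below is only an illustration of what the Web Activities send and read, not the pipeline definition itself. It assumes the AAD v2.0 client-credentials token endpoint and the Power BI `refreshes` endpoint for a workspace; the function names and all IDs are hypothetical, and the error field `serviceExceptionJson` is what the refresh history is expected to carry for a failed refresh.

```python
import json
from urllib.parse import urlencode

# Assumed public-cloud endpoints; check them against your own tenant.
AAD_TOKEN_URL = "https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
PBI_SCOPE = "https://analysis.windows.net/powerbi/api/.default"
PBI_REFRESH_URL = (
    "https://api.powerbi.com/v1.0/myorg/groups/{workspace_id}"
    "/datasets/{dataset_id}/refreshes"
)


def build_token_request(tenant_id, client_id, client_secret):
    """Stage 2: URL and form-encoded body for the AAD token request."""
    url = AAD_TOKEN_URL.format(tenant_id=tenant_id)
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": PBI_SCOPE,
    })
    return url, body


def build_refresh_request(workspace_id, dataset_id, access_token):
    """Stage 3: URL and headers for the POST that starts a dataset refresh."""
    url = PBI_REFRESH_URL.format(workspace_id=workspace_id, dataset_id=dataset_id)
    headers = {
        "Authorization": f"Bearer {access_token}",
        "Content-Type": "application/json",
    }
    return url, headers


def summarize_latest_refresh(history_json):
    """Stages 4 and 5: return (status, error) for the newest history entry.

    Assumes the history was fetched with GET on the same refreshes URL and
    that entries are ordered newest first.
    """
    entries = json.loads(history_json).get("value", [])
    if not entries:
        return "NoHistory", None
    latest = entries[0]
    status = latest.get("status", "Unknown")
    # Only a failed refresh is expected to carry an error payload.
    error = latest.get("serviceExceptionJson") if status == "Failed" else None
    return status, error
```

In the refresh history, a status of `Unknown` generally means the refresh is still in progress, so a pipeline would typically wait and check again until the status becomes `Completed` or `Failed`.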

The Permissions Required:

- Power BI Tenant Administrator (or Azure Global Administrator)

- To create an AAD Security Group (AAD Administrator)

- To create a Power BI App Workspace (V2) (by default a Power BI Pro user can do this, but it might be disabled in your tenant)

- To create an Azure Key Vault, an Azure Data Factory, and to configure the Identity Access Management on the Key Vault (the OWNER role on an Azure Resource Group provides all of this)

Steps to the Solution:

- Create an AAD App Registration. Add a secret key.

- Create an AAD Security Group and add the App Registration's service principal (SP) object to it.

- In the Power BI tenant settings, register the Security Group for API access using service principals.

- Create a Power BI App Workspace (only Version 2/V2 is supported).

- Add the service principal as an admin to the Workspace.

- Create an Azure Key Vault and add the secrets involved.

- Create an Azure Data Factory.

- Make sure Data Factory can authenticate to the Key Vault.

- Create an Azure Data Factory pipeline (use my example).
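As a rough illustration of the Key Vault steps above, this is the shape of the request that reads a secret once Data Factory is allowed to authenticate to the vault. It is a sketch under assumptions: the vault and secret names are hypothetical, the `api-version` value is one that the Key Vault REST API accepts at the time of writing, and the token for the call is assumed to be issued for the `https://vault.azure.net` resource (for example through the Data Factory's managed identity).

```python
import json

# Resource (audience) the access token is assumed to be issued for.
KEY_VAULT_RESOURCE = "https://vault.azure.net"


def build_secret_url(vault_name, secret_name, api_version="7.4"):
    """URL for a GET that returns the latest version of a secret."""
    return (
        f"https://{vault_name}.vault.azure.net/secrets/{secret_name}"
        f"?api-version={api_version}"
    )


def extract_secret_value(response_json):
    """The secret itself is in the 'value' field of the JSON response."""
    return json.loads(response_json)["value"]


# Hypothetical names, for illustration only.
print(build_secret_url("my-vault", "pbi-client-secret"))
```

Keeping the client ID and client secret in the vault means the pipeline definition never contains them; later activities only reference the output of the lookup.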

After completing all of the steps above, the pipeline looks like this: